Wikipedia:Wikipedia Signpost/2014-08-20/Op-ed

A new metric for Wikimedia

TL;DR: We should focus on measuring how much knowledge we allow every human to share in, instead of the number of articles or active editors. A project to measure Wikimedia's success has been started. We can already start using this metric to evaluate new proposals with a common measure.

In the middle of the night, a man searches frantically for something, walking around a streetlamp. Another man passes by and asks if he can help. “Yes, I'm looking for my car keys; I lost them over there,” he says, pointing to the path leading to the streetlamp. Confused, the other man asks: “Then why are you looking for them here, if you lost them there?” “Well, the light is here.”

This is quite a common pattern. Creating and agreeing on measures and metrics that capture what is really important can often be very hard and sometimes impossible. If we are lucky, the numbers and data that are readily available are a good signal for what is actually important. If we're not lucky, there's no correlation between what we can measure and what we should measure. We may end up like the man searching for his keys.

In my humble opinion, the Wikimedia movement seems to be in a similar situation. For a long time we've been keenly looking at the number of articles. Today, the primary metrics we measure are unique visitors, pageviews, and new and active editors. The Wikimetrics project is adding powerful ways to create cohort-based reports and other metrics, and it brings an enormous amount of value to everyone interested in the development of Wikimedia projects. But are these really the primary metrics we should be keeping an eye on? Is editor engagement the ultimate goal? I agree that these are very interesting metrics, but I'm unsure whether they answer the question: are we achieving our mission?

What would an alternative look like? I want to sketch out a proposal. It's not a ready-made solution, but I hope it starts the conversation.

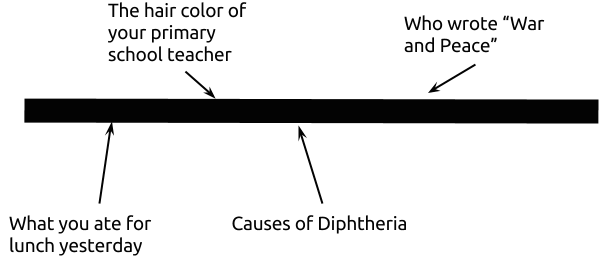

Wikimedia’s vision is “a world in which every single human being can freely share in the sum of all knowledge”. Let’s start from there. Imagine that this line represents all knowledge; a few examples are marked on it.

I don’t want to suggest that knowledge is one-dimensional, but it is a helpful simplification. A lot of knowledge is outside the scope of Wikimedia projects, so let’s cut it out for our next step. I suggest something like a logarithmic scale on this line, because a little knowledge goes a long way. Take an analogy: a hundred dollars is much more important to a poor person than to a billionaire, and a logarithmic scale captures that. But we also have to remember that knowledge can't be arbitrarily ordered: it's not just data, but depends on the reader’s previous knowledge. Finally, let’s sort that knowledge and color the line in two colors: the knowledge that is already available to me thanks to Wikimedia (here shown in black), and the knowledge that is not (here shown in white). If we do all this, the above line could look like this:

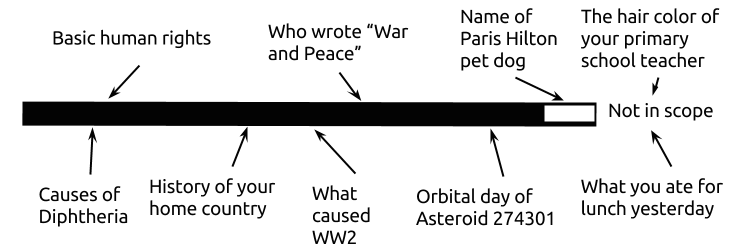

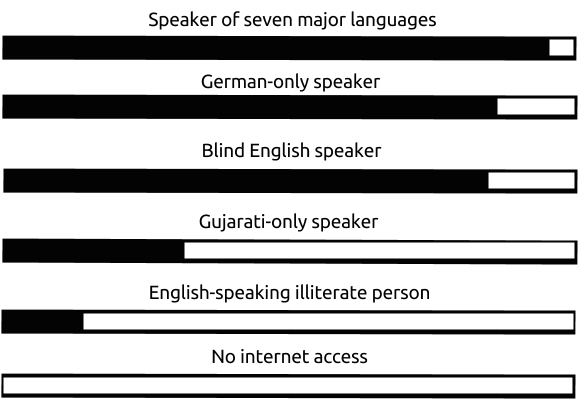
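To make the logarithmic scale a little more concrete, here is a minimal Python sketch of how one person's line might be reduced to a single number. It rests on assumptions that are not part of the proposal itself: that the knowledge within Wikimedia's scope can be listed as discrete items ranked from most to least important for that person, and that each item is either available to them through Wikimedia (black) or not (white). The function `line_coverage` is hypothetical; it gives the item at rank r the segment from log(r) to log(r+1), normalized so that the whole line has length 1, which is one way of expressing that a little knowledge goes a long way.

```python
import math
from typing import Sequence


def line_coverage(available: Sequence[bool]) -> float:
    """Share of one person's knowledge line that is "black" (hypothetical).

    available[k] says whether the (k+1)-th most important piece of
    knowledge for this person is accessible to them via Wikimedia.
    Assumption for this sketch: item k occupies the log-scaled segment
    [log(k+1), log(k+2)], normalized by log(n+1), so the most important
    items take up more of the line than the less important ones.
    """
    n = len(available)
    if n == 0:
        return 0.0
    total = math.log(n + 1)  # length of the whole line before normalizing
    covered = sum(
        math.log(k + 2) - math.log(k + 1)
        for k, is_available in enumerate(available)
        if is_available
    )
    return covered / total


# Same amount of "data" (one item), very different share of the line.
print(line_coverage([True, False, False]))  # most important item only
print(line_coverage([False, False, True]))  # least important item only
```

With three items, having only the most important one covers half of the line, while having only the least important one covers about a fifth of it.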

Bear with me: this is just an intuitive estimate, and you might have drawn the line very differently. That’s OK. What is more important is that for every one of us this line looks quite different. For example, a person with a deeper insight into political theories might be able to gain even more from certain articles in Wikipedia than I do. A person who is more challenged by reading long and convoluted sentences might find large parts of the English Wikipedia (or this essay) inaccessible (see, for example, the readability of Wikipedia project or this (German) collection of works on understandability). A person who speaks a different set of languages will read and understand articles that I don’t understand, and vice versa. A person with more restricted or more expensive access to the Internet will have a different line. In the end, we would have more than seven billion such lines, one for every human on the planet.

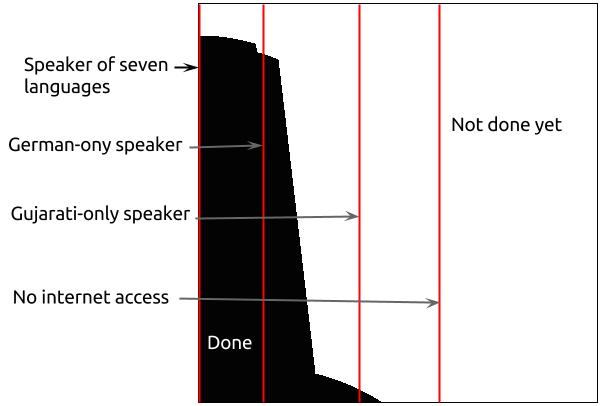

Let us now take all these seven billion lines, turn them sideways, sort them, and then stick them together. The result is a curve. And that curve tells us how far along we are in achieving our vision, and how far we still have to go.

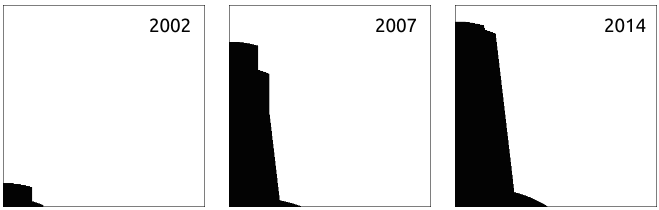
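The step from seven billion lines to one curve can be sketched in the same hedged way. In this hypothetical Python function, each person's line is assumed to have already been reduced to one coverage value between 0 and 1 (for instance, the share of the line that is black). The people are then sorted from most to least covered and placed side by side, each taking an equal slice of the horizontal axis. The sorting direction and the use of a plain average as the area are assumptions made for this sketch, not part of the proposal.

```python
from typing import List, Tuple


def knowledge_curve(coverages: List[float]) -> Tuple[List[Tuple[float, float]], float]:
    """Build a step curve from per-person coverage values in [0, 1].

    x is the share of humanity (people sorted from most to least covered),
    y is that person's covered share of knowledge. Returns the corner
    points of the step curve and the area under it.
    """
    if not coverages:
        raise ValueError("need at least one person")
    ordered = sorted(coverages, reverse=True)
    n = len(ordered)
    points = []
    for i, c in enumerate(ordered):
        # Each person occupies a slice of width 1/n on the x axis.
        points.append((i / n, c))
        points.append(((i + 1) / n, c))
    # The area of this step curve equals the average coverage.
    area = sum(ordered) / n
    return points, area


points, area = knowledge_curve([0.2, 0.9, 0.0, 0.5])
print(f"area under the curve: {area:.2f}")
```

In this toy case of four people, the area under the curve is 0.4: the white region above the curve is how far we still have to go, and the area can be recomputed for estimates at different points in time.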

We can estimate this curve at different points in time and see how Wikimedia has evolved in the last few years.

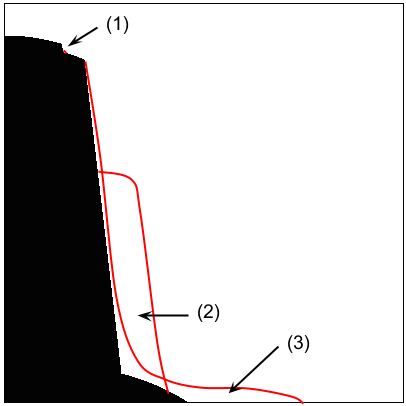

We can visualize an estimate of the effect that different proposals and initiatives would have on that curve. We can compare the effect of, say, (1) adding good articles about every asteroid to the Alemannic Wikipedia with (2) bringing the articles on a thousand of the most important topics up to good quality in Malay, Arabic, Hindi, and Chinese, and with (3) providing free access to Wikipedia through mobile providers in Indonesia, India, and China. Note that a combination of (2) and (3) would cover a much bigger area! (This is left as an exercise for the reader; maybe someone will add the answer in the comments section.)

All three projects would be good undertakings, and all three should be done. But the question the movement as a whole faces is how to prioritize the allocation of resources. Even though (1) might have a small effect, volunteers who decide to do it should be applauded and cherished. It would be a strange demand (though not an unheard-of one) to require Wikipedia to be balanced in its depth and coverage, for example to require that its articles on the rise and fall of the Roman Empire be at least as deep and detailed as those on the episodes of The Simpsons. The energy and interest of volunteers cannot be allocated arbitrarily. But there are resources that can: priorities in software development and business development by paid staff, or the financial resources available to the Funds Dissemination Committee. Currently, the intended effects of such allocations are hard to compare. With a common measure, like the one suggested here, diverse projects and initiatives could all be compared against a common goal.
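As an illustration of comparing allocations against a common goal, the following Python sketch ranks proposals by how much area each would add to the curve. Everything in it is hypothetical: `rank_proposals` is not an existing tool, the three-person population and its numbers are invented, and the assumption that a person's coverage is their access multiplied by the share of knowledge available in a language they read is only one crude way to model proposals like (2) and (3). Under that assumption, content and access reinforce each other.

```python
from typing import Dict, List, Tuple

# Hypothetical toy population: (share of time with affordable access,
# share of important knowledge available in a language the person reads).
# These numbers are invented for illustration only.
PEOPLE = [(1.0, 0.9), (0.2, 0.1), (0.3, 0.2)]


def coverages(population: List[Tuple[float, float]]) -> List[float]:
    """Toy assumption: a person's coverage is access times content."""
    return [access * content for access, content in population]


def area(values: List[float]) -> float:
    """Area under the sorted curve, i.e. the mean coverage."""
    return sum(values) / len(values)


def rank_proposals(baseline: List[float],
                   after: Dict[str, List[float]]) -> List[Tuple[str, float]]:
    """Rank proposals by the area they add to the curve, largest gain first."""
    base = area(baseline)
    gains = []
    for name, estimate in after.items():
        if len(estimate) != len(baseline):
            raise ValueError(f"{name}: expected {len(baseline)} people")
        gains.append((name, area(estimate) - base))
    return sorted(gains, key=lambda pair: pair[1], reverse=True)


baseline = coverages(PEOPLE)
ranking = rank_proposals(baseline, {
    # Like (2): raise content in the reader's language to at least 60%.
    "content": coverages([(a, max(c, 0.6)) for a, c in PEOPLE]),
    # Like (3): raise affordable access to at least 80%.
    "access": coverages([(max(a, 0.8), c) for a, c in PEOPLE]),
    # Both together.
    "both": coverages([(max(a, 0.8), max(c, 0.6)) for a, c in PEOPLE]),
})
for name, gain in ranking:
    print(f"{name}: +{gain:.3f}")
```

With these invented numbers, the combined proposal ranks first and its gain is larger than the two individual gains added together; real estimates would, of course, need real data.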

There are many open questions. How do we actually measure this metric? How do we know how much knowledge is available, and how much of it is covered? How do we estimate the effect of planned proposals? The conversation about how this metric can be measured and captured has already started on the research pages on Meta, and you are more than welcome to join and add your ideas to it. The WMF's analytics team is doing an astounding job of clearly defining its metrics, and measuring the overall success of Wikimedia should be done as thoroughly and collaboratively as the definition of any of these metrics (see also the 2014 talk by the analytics team).

While it would be fantastic to have a stable and good metric at hand, this is not required for the idea to be useful. The examples above show that we can already argue intuitively with the proposed curve. Over time, I expect us to develop a better understanding of, and intuition for, how to derive an increasingly precise curve, maybe even an automatically updated, collaboratively created online version of it, based on the statistics, metrics and data available from the WMF. We could then simply look the curve up, but the expectation would be that by then we already share a fairly common understanding of the curve and of what certain changes to it would mean.

This would allow us to speak more meaningfully about our goals and would free us from volatile metrics that don’t really express what we're trying to achieve; that way, we could stop looking solely at numbers such as the number of articles, pageviews, and active editors. Most of the metrics we currently look at are expected to have a place in deriving the proposed curve, but we have to realize that they're not the primary metrics we need to improve if we're to achieve our common vision.

- Denny Vrandečić is a researcher and received his PhD from Karlsruhe Institute of Technology in Germany. He is one of the original developers of Semantic MediaWiki, was project director of Wikidata while working for Wikimedia Germany, and now works for Google. A Wikipedian since 2003, he was the first administrator and bureaucrat of the Croatian Wikipedia.

- Acknowledgements and thanks go to Aaron Halfaker, Atlasowa, and Dario Taraborelli for their comments and contributions to this article. You can follow Vrandečić on Twitter.

- The views expressed in this opinion piece are those of the author only; responses and critical commentary are invited in the comments section. Editors wishing to propose their own Signpost contribution should email the Signpost's editor in chief.

Discuss this story

Hi Denny, good article and interesting topic.

I quite agree! I also wanted to point out a new tool I've built, Quarry, that lets people explore our databases for research purposes in a web-friendly way and share the results. Might be useful for less technically minded Wikimedians who want more power than Wikimetrics :) YuviPanda (talk) 12:55, 25 August 2014 (UTC)

Thank you for this, this is exactly the kind of discussion we should be having! Jan-Bart de Vreede 217.200.185.43 (talk) 09:05, 27 August 2014 (UTC)

Don't want to be ultra-pedantic, but you misspelled diphtheria... -- AnonMoos (talk) 04:39, 27 August 2014 (UTC)


Guy who lost keys

Interesting proposal. Just something about the parable 'Guy who lost keys': I loved the subtlety of this version, a pub chat between Hoyle and Feynman (skip to 24:00).

Some skeptical comments

Denny, this is a great article but I am somewhat skeptical of the metric and especially of its suggested relevance for the allocation of resources. Honestly, I would be worried that funding decisions on the basis of the suggested metric would do more harm than good because they simplify our complex goals too much. To give you at least one example: it seems to me that the metric is biased against small language projects for a number of related reasons:

(a) One of the strengths of small language Wikimedia projects is that they build free knowledge communities and contribute to the preservation of linguistic and other local knowledge. I think that Wikimedia's mission should also include these aspects of knowledge preservation and global community building of people who care about free knowledge. If we do not consider this part of our mission, we could simply slash any support for languages with fewer than x million monolingual speakers and reallocate the resources to Mandarin, Hindi, etc.

(b) If I understand the metric correctly, it represents knowledge in Wikimedia projects in complete isolation from other knowledge resources that users have access to. I'm afraid that this creates biased results given that the crucial question is how people actually use knowledge from Wikipedia and how they integrate it with other knowledge resources (see also my article here). For example, information in small language projects is often so valuable because it is not readily available anywhere else. The metric does not distinguish between this genuinely novel knowledge and knowledge that is readily available through other means such as a quick Google search.

(c) There are also vexed problems with the very notion of the "sum of all knowledge" in Wikimedia's mission. For example, it seems quite intuitive that missing knowledge on the top left of your diagram (e.g. the name of Paris Hilton's dog) tends to be less important than knowledge in the bottom right (e.g. the name of the current US president). Of course, we could adjust the measure so that the name of the US president covers more space in the diagram than the name of a celebrity's dog. But then we face the problem of having to assess the weight of different pieces of information across linguistic and cultural contexts.

All three points seem to contribute to a bias against small language projects. Furthermore, similar problems will pop up in other contexts. For example, problem (b) is equally obvious in the case of long specialized articles in English, German, French, etc. I always found long specialized articles to be especially valuable as far too much information in Wikipedia is the result of a quick Google search and essentially redundant to other information resources. However, the suggested metric is completely blind to that and seems to favor superficial work that transcribes readily available knowledge to Wikipedia over the work of editors who spend a lot of time in libraries and archives to free knowledge that is actually locked away.

Don't get me wrong: I think that the metric is very interesting and helpful in focusing on some important issues. However, I do not think that one simple metric can give reliable advice on complex questions of funding etc. Cheers, David Ludwig (talk) 20:03, 27 August 2014 (UTC)