January 24, 2025 report

Self-adaptive LLM dynamically adjusts its weights to learn new tasks

A trio of AI researchers at Sakana AI, a Japanese startup, has announced the development of a self-adaptive LLM called Transformer². The researchers, Qi Sun, Edoardo Cetin and Yujin Tang, have posted their paper on the arXiv preprint server.

As LLMs mature, AI researchers continue to refine them to be more efficient and less energy demanding. In this new study, the research trio has found a way to reduce one of the major inefficiencies in traditional LLMs—the need for fine-tuning if they are asked to do something they have not been trained to do.

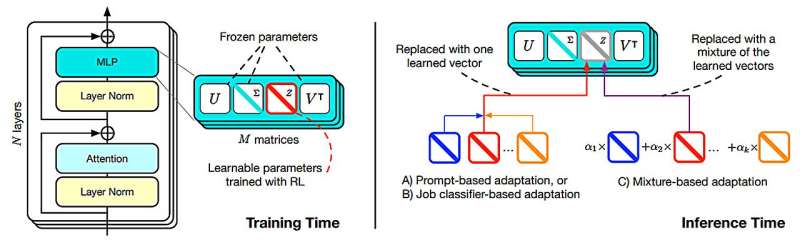

Under the current approach, an LLM is adapted to a new task by fine-tuning: its parameters are adjusted through training on new samples, and afterward the new parameters remain frozen in place. The research team has instead introduced a model that adjusts a system of weights whenever it encounters something new, allowing it to adapt dynamically to new types of tasks.

To allow the LLM to carry out dynamic adjustments, the researchers split the response to a task into two steps. The first involves analyzing the request and working out what will be required to provide a good response. The second involves adjusting a system of weights so that the model focuses its efforts on what will lead to an answer.

The system of weights uses a mathematical technique called singular value decomposition (SVD) to determine which parts of the model are most important for providing the best possible answer. Reinforcement learning is applied to create the steps needed to guide the AI's behavior.
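To make the SVD step more concrete, here is a minimal sketch in Python with NumPy. It assumes, as an illustration rather than a description of the team's actual code, that adapting a weight matrix means rescaling its singular values with a small per-task vector. The function name `scale_singular_values` and the vectors `z_identity` and `z_task` are hypothetical, and the random matrix merely stands in for one layer of a model.

```python
import numpy as np

def scale_singular_values(W, z):
    """Decompose W with SVD, rescale each singular value by z, and rebuild it."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    # U * (s * z) scales the columns of U, which equals U @ diag(s * z).
    return (U * (s * z)) @ Vt

rng = np.random.default_rng(0)
W = rng.standard_normal((4, 3))      # stand-in for one weight matrix

z_identity = np.ones(3)              # leaves the matrix unchanged
z_task = np.array([1.5, 1.0, 0.0])   # hypothetical: boost one component, drop another

W_same = scale_singular_values(W, z_identity)
W_adapted = scale_singular_values(W, z_task)
```

In this sketch the vector has only as many entries as the matrix has singular values, which is why an adjustment of this kind can be far smaller than the matrix it modifies.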

During inference (the stage in which the system generates a response to a query), the system employs three main strategies to achieve its goals: one based on the prompt, another that uses a classifier, and a third that applies a few-shot adaptation process (in which an AI model learns from a limited set of examples). Once the weights have been applied, the LLM carries on in similar fashion to other LLMs.
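The two-step flow can be sketched in a few lines of Python. Everything here is a toy stand-in chosen for illustration: the keyword rules in `classify_task` replace a real classifier, the entries of `EXPERT_VECTORS` are made-up numbers rather than learned values, and `mix_vectors` assumes that the few-shot strategy blends several task vectors into one.

```python
import numpy as np

# Hypothetical per-task scaling vectors; a real system would learn these.
EXPERT_VECTORS = {
    "math": np.array([1.4, 1.0, 0.6]),
    "code": np.array([0.8, 1.3, 1.0]),
    "other": np.ones(3),
}

def classify_task(prompt):
    """Step one (toy stand-in): label the request using keyword rules."""
    text = prompt.lower()
    if any(word in text for word in ("solve", "integral", "equation")):
        return "math"
    if any(word in text for word in ("function", "bug", "compile")):
        return "code"
    return "other"

def mix_vectors(weights):
    """Assumed few-shot variant: weighted average of several task vectors."""
    total = sum(weights.values())
    return sum((w / total) * EXPERT_VECTORS[name] for name, w in weights.items())

def adapt(W, z):
    """Step two: rescale the singular values of W by z."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    return (U * (s * z)) @ Vt

def respond(prompt, W):
    """Run both steps and return the chosen task and the adapted weights."""
    task = classify_task(prompt)
    return task, adapt(W, EXPERT_VECTORS[task])

W = np.random.default_rng(1).standard_normal((5, 3))
task, W_adapted = respond("Solve this equation for x", W)
```

After `respond` returns, the adapted matrix would be used for ordinary generation, matching the article's point that the model then behaves like any other LLM.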

The overall result is that an LLM using the new approach can adjust itself on the fly when faced with an unfamiliar task. In testing, the system performed as well as other LLMs on traditional queries but was much more flexible in answering queries that confused other models.

More information: Qi Sun et al, Transformer²: Self-adaptive LLMs, arXiv (2025). DOI: 10.48550/arxiv.2501.06252

© 2025 Science X Network